There was an interesting Twitter thread yesterday about the tradeoffs of going serverless.

There are indeed many such tradeoffs. Serverless approaches offer many benefits, in particular the potential for cost savings (at least in the early stages of a project’s lifecycle) and the chance to offload what would otherwise be a lot of operational management work onto the cloud provider, letting you focus on building your business and not an abstraction castle.

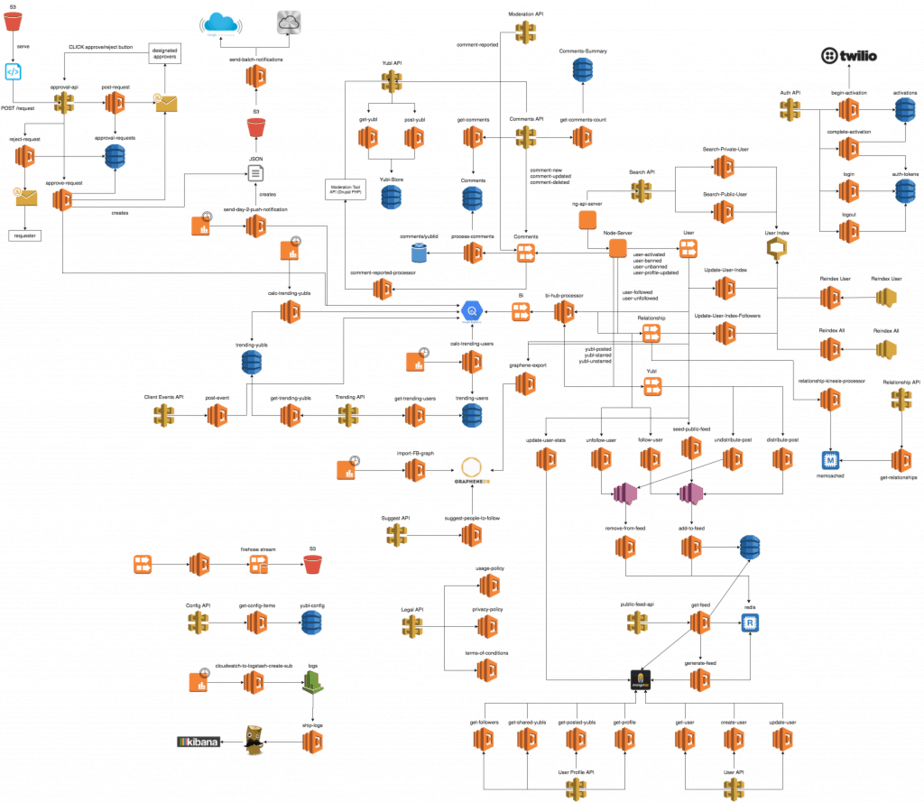

Potential for greater complexity

This isn’t even an exaggeration of the complexity of most serverless and cloud architectures I have worked with. Cloud providers like AWS and GCP make it very easy to add ever more nodes to the architecture diagram, as they are more than happy to charge you for all of these managed services. This needs to be balanced against the potential for cost savings by handling the fundamentals yourself, and of course the eternal benefits of keeping things simple.

Lack of observability / lack of ability to audit

Because serverless approaches can make it easy to continually add more and more individually deployed functions, there can be a combinatorial explosion of potential execution paths through the distributed system as the functions interact with each other in myriad ways. This can make it difficult to observe what is happening at the logical level, or to track down bugs hiding amongst obscure edges in the execution graph.

This point might be more debatable than the rest, as there is an argument that you get better observability with serverless architectures because you can easily separate out compute, storage, running costs and so on down to the function level.
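To put a rough number on the path explosion described above, here is a minimal sketch that counts the distinct execution paths through a call graph of functions. The graphs and function names (`api`, `validate`, `layered_graph` and so on) are invented for illustration, and the model assumes an acyclic graph in which each invocation follows exactly one outgoing edge per hop.

```python
from functools import lru_cache

# Hypothetical call graph: each key is a deployed function, each value lists
# the functions it may invoke next. An empty list marks an end of the flow.
CALL_GRAPH = {
    "api": ["validate", "enrich"],
    "validate": ["store", "notify"],
    "enrich": ["store", "notify"],
    "store": ["audit"],
    "notify": ["audit"],
    "audit": [],
}


def count_paths(graph, start):
    """Count distinct paths from `start` to any terminal node of an acyclic graph."""

    @lru_cache(maxsize=None)
    def paths_from(node):
        targets = graph[node]
        if not targets:
            return 1  # a terminal function ends exactly one path
        return sum(paths_from(target) for target in targets)

    return paths_from(start)


def layered_graph(layers, width):
    """Build a graph of `layers` stages, each with `width` functions, where every
    function in one stage may call every function in the next stage."""
    graph = {"entry": [f"L0_{i}" for i in range(width)]}
    for layer in range(layers):
        is_last = layer + 1 == layers
        next_stage = [] if is_last else [f"L{layer + 1}_{j}" for j in range(width)]
        for i in range(width):
            graph[f"L{layer}_{i}"] = next_stage
    return graph


if __name__ == "__main__":
    print(count_paths(CALL_GRAPH, "api"))
    print(count_paths(layered_graph(3, 3), "entry"))
```

In the layered case the path count is `width ** layers`, so nine functions arranged in three stages of three already give 27 paths to reason about, and each extra stage multiplies that again.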

Database connection count

Pretty much every company that uses serverless is going to hit the max database connections issue. With every function invocation in its own container demanding its own database connection, it’s easy to exceed the maximum number of concurrent connections that the database can handle.

Having said that, this was already a problem with elastic autoscaling servers in the cloud – it’s perfectly possible to scale up enough servers to exceed your database’s max connections limit.

There are various solutions to this, including database connection pooling, CQRS, read-replicas and so on.
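One small mitigation that often sits alongside these is to reuse a connection across warm invocations of the same container rather than opening a new one per call. The sketch below assumes a Lambda-style `handler(event, context)` entry point and uses the standard library's `sqlite3` purely as a stand-in for a real database driver; `get_connection` is a hypothetical helper name.

```python
import sqlite3

# Module-level state is created once per container and survives across warm
# invocations, so the connection is opened lazily and then reused.
_connection = None


def get_connection():
    """Return the cached connection, opening it on first use."""
    global _connection
    if _connection is None:
        # Stand-in for a real database; a production driver would take a
        # host, credentials and so on here.
        _connection = sqlite3.connect(":memory:")
    return _connection


def handler(event, context=None):
    """Hypothetical function entry point that reuses the cached connection."""
    conn = get_connection()
    row = conn.execute("SELECT 1").fetchone()
    return {"result": row[0], "connection_id": id(conn)}


if __name__ == "__main__":
    first = handler({})
    second = handler({})
    # Both invocations in this process share one connection.
    print(first["connection_id"] == second["connection_id"])
```

This only caps the count at one connection per container. If the platform scales out to more containers than the database allows connections, you still need something in front of the database, such as an external connection pooler.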

Vendor lock-in

Another common objection to serverless approaches is the high potential for vendor lock-in, as you’re tied into the serverless technology of whichever cloud platform you use. Cloud providers tend to encourage this by pushing features such as vendor-specific function triggers that tend to rely on use of their SDK to be convenient.

If you treat serverless as more of a deployment strategy than an architectural paradigm, you might be able to mitigate this issue to some extent by depending less on vendor-specific offerings, or at least depending on them less directly.
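As a rough illustration of depending on vendor offerings less directly, the sketch below keeps the business logic in a plain function and confines the platform-specific event handling to a thin adapter. The names `create_greeting`, `lambda_style_handler` and `local_handler` are hypothetical, and the event shape (a JSON string under `"body"`, a response with `"statusCode"` and `"body"`) is an assumption modelled on a typical HTTP-triggered function.

```python
import json


def create_greeting(name: str) -> dict:
    """Vendor-neutral business logic: no cloud SDK, no event objects."""
    return {"message": f"Hello, {name}!"}


def lambda_style_handler(event, context=None):
    """Thin adapter for an HTTP-triggered function.

    Only this layer knows about the (assumed) event and response shapes.
    """
    payload = json.loads(event.get("body") or "{}")
    result = create_greeting(payload.get("name", "world"))
    return {"statusCode": 200, "body": json.dumps(result)}


def local_handler(name: str = "world") -> str:
    """Second adapter over the same core, e.g. for a CLI or a local server."""
    return json.dumps(create_greeting(name))


if __name__ == "__main__":
    print(lambda_style_handler({"body": json.dumps({"name": "Ada"})}))
    print(local_handler("Ada"))
```

Moving to another platform then means writing a new adapter rather than rewriting the logic, although triggers, permissions and deployment configuration remain vendor-specific.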